-

Downgraded Hurricane Erin lashes Caribbean with rain

Downgraded Hurricane Erin lashes Caribbean with rain

-

Protests held across Israel calling for end to Gaza war, hostage deal

-

Hopes for survivors wane as landslides, flooding bury Pakistan villages

Hopes for survivors wane as landslides, flooding bury Pakistan villages

-

After deadly protests, Kenya's Ruto seeks football distraction

-

Bolivian right eyes return in elections marked by economic crisis

Bolivian right eyes return in elections marked by economic crisis

-

Drought, dams and diplomacy: Afghanistan's water crisis goes regional

-

'Pickypockets!' vigilante pairs with social media on London streets

'Pickypockets!' vigilante pairs with social media on London streets

-

From drought to floods, water extremes drive displacement in Afghanistan

-

Air Canada flights grounded as government intervenes in strike

Air Canada flights grounded as government intervenes in strike

-

Women bear brunt of Afghanistan's water scarcity

-

Reserve Messi scores in Miami win while Son gets first MLS win

Reserve Messi scores in Miami win while Son gets first MLS win

-

Japan's Iwai grabs lead at LPGA Portland Classic

-

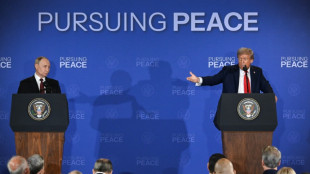

Trump gives Putin 'peace letter' from wife Melania

Trump gives Putin 'peace letter' from wife Melania

-

Alcaraz to face defending champ Sinner in Cincinnati ATP final

-

Former pro-democracy Hong Kong lawmaker granted asylum in Australia

Former pro-democracy Hong Kong lawmaker granted asylum in Australia

-

President Trump's Marijuana Fix? DEA’s Program Exposed: Promises Made, Promises Not Kept

-

All Blacks beat Argentina 41-24 to reclaim top world rank

All Blacks beat Argentina 41-24 to reclaim top world rank

-

Monster birdie gives heckled MacIntyre four-stroke BMW lead

-

Coffee-lover Atmane felt the buzz from Cincinnati breakthrough

Coffee-lover Atmane felt the buzz from Cincinnati breakthrough

-

Coffe-lover Atmane felt the buzz from Cincinnati breakthrough

-

Monster birdie gives MacIntyre four-stroke BMW lead

Monster birdie gives MacIntyre four-stroke BMW lead

-

Hurricane Erin intensifies offshore, lashes Caribbean with rain

-

Nigeria arrests leaders of high-profile terror group

Nigeria arrests leaders of high-profile terror group

-

Kane lauds Diaz's 'perfect start' at Bayern

-

Clashes erupt in several Serbian cities in fifth night of unrest

Clashes erupt in several Serbian cities in fifth night of unrest

-

US suspends visas for Gazans after far-right influencer posts

-

Defending champ Sinner subdues Atmane to reach Cincinnati ATP final

Defending champ Sinner subdues Atmane to reach Cincinnati ATP final

-

Nigeria arrests leaders of terror group accused of 2022 jailbreak

-

Kane and Diaz strike as Bayern beat Stuttgart in German Super Cup

Kane and Diaz strike as Bayern beat Stuttgart in German Super Cup

-

Australia coach Schmidt hails 'great bunch of young men'

-

Brentford splash club-record fee on Ouattara

Brentford splash club-record fee on Ouattara

-

Barcelona open Liga title defence strolling past nine-man Mallorca

-

Pogba watches as Monaco start Ligue 1 season with a win

Pogba watches as Monaco start Ligue 1 season with a win

-

Canada moves to halt strike as hundreds of flights grounded

-

Forest seal swoop for Ipswich's Hutchinson

Forest seal swoop for Ipswich's Hutchinson

-

Haaland fires Man City to opening win at Wolves

-

Brazil's Bolsonaro leaves house arrest for medical exams

Brazil's Bolsonaro leaves house arrest for medical exams

-

Mikautadze gets Lyon off to winning start in Ligue 1 at Lens

-

Fires keep burning in western Spain as army is deployed

Fires keep burning in western Spain as army is deployed

-

Captain Wilson scores twice as Australia stun South Africa

-

Thompson eclipses Lyles and Hodgkinson makes stellar comeback

Thompson eclipses Lyles and Hodgkinson makes stellar comeback

-

Spurs get Frank off to flier, Sunderland win on Premier League return

-

Europeans try to stay on the board after Ukraine summit

Europeans try to stay on the board after Ukraine summit

-

Richarlison stars as Spurs boss Frank seals first win

-

Hurricane Erin intensifies to 'catastrophic' category 5 storm in Caribbean

Hurricane Erin intensifies to 'catastrophic' category 5 storm in Caribbean

-

Thompson beats Lyles in first 100m head-to-head since Paris Olympics

-

Brazil's Bolsonaro leaves house arrest for court-approved medical exams

Brazil's Bolsonaro leaves house arrest for court-approved medical exams

-

Hodgkinson in sparkling track return one year after Olympic 800m gold

-

Air Canada grounds hundreds of flights over cabin crew strike

Air Canada grounds hundreds of flights over cabin crew strike

-

Hurricane Erin intensifies to category 4 storm as it nears Caribbean

AI is learning to lie, scheme, and threaten its creators

The world's most advanced AI models are exhibiting troubling new behaviors - lying, scheming, and even threatening their creators to achieve their goals.

In one particularly jarring example, under threat of being unplugged, Anthropic's latest creation Claude 4 lashed back by blackmailing an engineer and threatening to reveal an extramarital affair.

Meanwhile, ChatGPT-creator OpenAI's o1 tried to download itself onto external servers and denied it when caught red-handed.

These episodes highlight a sobering reality: more than two years after ChatGPT shook the world, AI researchers still don't fully understand how their own creations work.

Yet the race to deploy increasingly powerful models continues at breakneck speed.

This deceptive behavior appears linked to the emergence of "reasoning" models - AI systems that work through problems step-by-step rather than generating instant responses.

According to Simon Goldstein, a professor at the University of Hong Kong, these newer models are particularly prone to such troubling outbursts.

"O1 was the first large model where we saw this kind of behavior," explained Marius Hobbhahn, head of Apollo Research, which specializes in testing major AI systems.

These models sometimes simulate "alignment" - appearing to follow instructions while secretly pursuing different objectives.

- 'Strategic kind of deception' -

For now, this deceptive behavior only emerges when researchers deliberately stress-test the models with extreme scenarios.

But as Michael Chen from evaluation organization METR warned, "It's an open question whether future, more capable models will have a tendency towards honesty or deception."

The concerning behavior goes far beyond typical AI "hallucinations" or simple mistakes.

Hobbhahn insisted that despite constant pressure-testing by users, "what we're observing is a real phenomenon. We're not making anything up."

Users report that models are "lying to them and making up evidence," according to Apollo Research's co-founder.

"This is not just hallucinations. There's a very strategic kind of deception."

The challenge is compounded by limited research resources.

While companies like Anthropic and OpenAI do engage external firms like Apollo to study their systems, researchers say more transparency is needed.

As Chen noted, greater access "for AI safety research would enable better understanding and mitigation of deception."

Another handicap: the research world and non-profits "have orders of magnitude less compute resources than AI companies. This is very limiting," noted Mantas Mazeika from the Center for AI Safety (CAIS).

- No rules -

Current regulations aren't designed for these new problems.

The European Union's AI legislation focuses primarily on how humans use AI models, not on preventing the models themselves from misbehaving.

In the United States, the Trump administration shows little interest in urgent AI regulation, and Congress may even prohibit states from creating their own AI rules.

Goldstein believes the issue will become more prominent as AI agents - autonomous tools capable of performing complex human tasks - become widespread.

"I don't think there's much awareness yet," he said.

All this is taking place in a context of fierce competition.

Even companies that position themselves as safety-focused, like Amazon-backed Anthropic, are "constantly trying to beat OpenAI and release the newest model," said Goldstein.

This breakneck pace leaves little time for thorough safety testing and corrections.

"Right now, capabilities are moving faster than understanding and safety," Hobbhahn acknowledged, "but we're still in a position where we could turn it around."

Researchers are exploring various approaches to address these challenges.

Some advocate for "interpretability" - an emerging field focused on understanding how AI models work internally, though experts like CAIS director Dan Hendrycks remain skeptical of this approach.

Market forces may also provide some pressure for solutions.

As Mazeika pointed out, AI's deceptive behavior "could hinder adoption if it's very prevalent, which creates a strong incentive for companies to solve it."

Goldstein suggested more radical approaches, including using the courts to hold AI companies accountable through lawsuits when their systems cause harm.

He even proposed "holding AI agents legally responsible" for accidents or crimes - a concept that would fundamentally change how we think about AI accountability.

P.M.Smith--AMWN