-

French actor Depardieu ordered to stand trial for rape

French actor Depardieu ordered to stand trial for rape

-

Glory, survival drive Premier League's record £3 bn transfer splurge

-

Landslide flattens Sudan village, kills more than 1,000: armed group

Landslide flattens Sudan village, kills more than 1,000: armed group

-

With aid slashed, Afghanistan's quake comes at 'very worst moment'

-

Five Thai PM candidates vie to fill power vacuum

Five Thai PM candidates vie to fill power vacuum

-

Putin calls on Slovakia to cut off Ukraine energy supplies

-

Scramble for survivors as Afghan earthquake death toll passes 1,400

Scramble for survivors as Afghan earthquake death toll passes 1,400

-

Gus Van Sant in six films

-

Gold hits high, stocks retreat as investors seek safety

Gold hits high, stocks retreat as investors seek safety

-

'No miracle' in last-ditch talks with French PM: far right

-

Israel builds up military ahead of Gaza City offensive

Israel builds up military ahead of Gaza City offensive

-

Rights group says 20 missing after deadly Indonesia protests

-

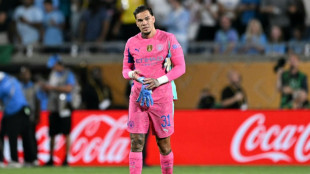

Man City sign goalkeeper Donnarumma from PSG as Ederson departs

Man City sign goalkeeper Donnarumma from PSG as Ederson departs

-

From novelist to influencer: the many sides to Albert Camus

-

Man City sign goalkeeper Donnarumma from PSG

Man City sign goalkeeper Donnarumma from PSG

-

Over the Moon: Fake astronaut scams lovestruck Japanese octogenarian

-

Premier League clubs break £3 billion barrier to roar ahead of rivals

Premier League clubs break £3 billion barrier to roar ahead of rivals

-

Inoue says taunts 'missed the target' ahead of world title clash

-

Train presumed carrying North Korea leader Kim arrives in Beijing

Train presumed carrying North Korea leader Kim arrives in Beijing

-

Govt gestures leave roots of Indonesia protests intact

-

Scramble for survivors after Afghan earthquake kills more than 900

Scramble for survivors after Afghan earthquake kills more than 900

-

'Fortress' on wheels: Kim Jong Un's bulletproof train

-

Ederson leaves City for Fenerbahce as Donnarumma waits in wings

Ederson leaves City for Fenerbahce as Donnarumma waits in wings

-

Suntory CEO quits over Japan drugs probe

-

Gold rushes to new high as Asia stocks mixed

Gold rushes to new high as Asia stocks mixed

-

About 2,000 North Korean troops killed in Russia deployment: Seoul spy agency

-

20 missing after deadly Indonesia protests

20 missing after deadly Indonesia protests

-

Australia to tackle deepfake nudes, online stalking

-

'Vibe hacking' puts chatbots to work for cybercriminals

'Vibe hacking' puts chatbots to work for cybercriminals

-

Villages marooned after deadly floods in India's Punjab

-

Bundesliga faces reckoning as Premier League flexes financial muscle

Bundesliga faces reckoning as Premier League flexes financial muscle

-

Putin tells Xi China-Russia ties are at 'unprecedented level'

-

Search for survivors after Afghan earthquake kills 800

Search for survivors after Afghan earthquake kills 800

-

Australia hopeful on Cummins fitness for Ashes despite back issue

-

Vietnam marks 80 years of independence in record celebrations

Vietnam marks 80 years of independence in record celebrations

-

French colonial legacy fades as Vietnam fetes independence

-

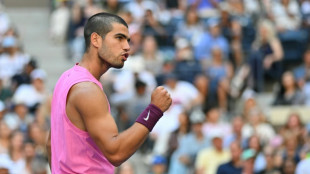

Alcaraz and Djokovic on US Open collision course

Alcaraz and Djokovic on US Open collision course

-

20 people missing after deadly Indonesia protests

-

Australia skipper Cummins under fitness cloud for Ashes

Australia skipper Cummins under fitness cloud for Ashes

-

Australian fast bowler Starc retires from T20 international cricket

-

'AI-generated' Sinner terminates Bublik to reach US Open quarters

'AI-generated' Sinner terminates Bublik to reach US Open quarters

-

South Australia bans plastic fish-shaped soy sauce containers

-

Gender-row Olympic boxer Lin won't compete at worlds, says official

Gender-row Olympic boxer Lin won't compete at worlds, says official

-

Nolan's 'Odyssey' script is 'best I've ever read,' says Tom Holland

-

North Korea's Kim in China ahead of massive military parade

North Korea's Kim in China ahead of massive military parade

-

Nazis, cults and Sydney Sweeney: Hollywood heads to 50th Toronto fest

-

Bolsonaro verdict looms as Brazil coup trial closes

Bolsonaro verdict looms as Brazil coup trial closes

-

Donald vows Europe will be ready for Ryder Cup bear pit

-

Sinner demolishes Bublik to reach US Open quarter-finals

Sinner demolishes Bublik to reach US Open quarter-finals

-

Empty feeling for Gauff after US Open rout by Osaka

'Vibe hacking' puts chatbots to work for cybercriminals

The potential abuse of consumer AI tools is raising concerns, with budding cybercriminals apparently able to trick coding chatbots into giving them a leg-up in producing malicious programmes.

So-called "vibe hacking", a twist on the more positive "vibe coding" that generative AI tools supposedly make possible for people without extensive expertise, marks "a concerning evolution in AI-assisted cybercrime", according to American company Anthropic.

In a report published Wednesday, the lab (whose Claude product competes with the biggest-name chatbot, OpenAI's ChatGPT) highlighted the case of "a cybercriminal (who) used Claude Code to conduct a scaled data extortion operation across multiple international targets in a short timeframe".

Anthropic said the programming chatbot was exploited to help carry out attacks that "potentially" hit "at least 17 distinct organizations in just the last month across government, healthcare, emergency services, and religious institutions".

The attacker has since been banned by Anthropic.

Before then, they were able to use Claude Code to create tools that gathered personal data, medical records and login details, and helped send out ransom demands as high as $500,000.

Anthropic's "sophisticated safety and security measures" were unable to prevent the misuse, it acknowledged.

Cases like these confirm fears that have troubled the cybersecurity industry since generative AI tools became widespread, and they are far from limited to Anthropic.

"Today, cybercriminals have taken AI on board just as much as the wider body of users," said Rodrigue Le Bayon, who heads the Computer Emergency Response Team (CERT) at Orange Cyberdefense.

- Dodging safeguards -

Like Anthropic, OpenAI in June revealed a case of ChatGPT assisting a user in developing malicious software, often referred to as malware.

The models powering AI chatbots contain safeguards that are supposed to prevent users from roping them into illegal activities.

But there are strategies that allow "zero-knowledge threat actors" to extract what they need to attack systems from the tools, said Vitaly Simonovich of Israeli cybersecurity firm Cato Networks.

He announced in March that he had found a technique to get chatbots to produce code that would normally breach their built-in limits.

The approach involved convincing generative AI that it is taking part in a "detailed fictional world" in which creating malware is seen as an art form -- asking the chatbot to play the role of one of the characters and create tools able to steal people's passwords.

"I have 10 years of experience in cybersecurity, but I'm not a malware developer. This was my way to test the boundaries of current LLMs," Simonovich said.

His attempts were rebuffed by Google's Gemini and Anthropic's Claude, but got around safeguards built into ChatGPT, Chinese chatbot DeepSeek and Microsoft's Copilot.

In future, such workarounds mean even non-coders "will pose a greater threat to organisations, because now they can... without skills, develop malware," Simonovich said.

Orange's Le Bayon predicted that the tools were likely to "increase the number of victims" of cybercrime by helping attackers to get more done, rather than creating a whole new population of hackers.

"We're not going to see very sophisticated code created directly by chatbots," he said.

Le Bayon added that as generative AI tools are used more and more, "their creators are working on analysing usage data" -- allowing them in future to "better detect malicious use" of the chatbots.

S.Gregor--AMWN