-

Barca's Yamal nets hat-trick in Villarreal romp, Atletico go third

Barca's Yamal nets hat-trick in Villarreal romp, Atletico go third

-

Trump says Khamenei is dead after Israel, US attack Iran

-

Iran's Khamenei: ruthless revolutionary atop Islamic republic

Iran's Khamenei: ruthless revolutionary atop Islamic republic

-

Inter continue Scudetto march after Champions League humbling

-

Questions cloud Trump's case for war against Iran

Questions cloud Trump's case for war against Iran

-

Latest developments in US, Israel strikes on Iran

-

Fears of Mideast war as US-Iran conflict flares

Fears of Mideast war as US-Iran conflict flares

-

Guardiola expects short absence for injured Haaland

-

Liverpool's set play transformation a return to 'normal' for Slot

Liverpool's set play transformation a return to 'normal' for Slot

-

Man City win to close on Arsenal as Liverpool hit five

-

Kane bags brace as Bayern end Dortmund's title hopes

Kane bags brace as Bayern end Dortmund's title hopes

-

Semenyo sinks Leeds as Man City close gap on Arsenal

-

Last-gasp Lukaku saves Napoli's blushes at rock-bottom Verona

Last-gasp Lukaku saves Napoli's blushes at rock-bottom Verona

-

Could the US-Israel war on Iran drag on?

-

Iranians abroad jittery but jubilant at US, Israeli strikes

Iranians abroad jittery but jubilant at US, Israeli strikes

-

Pakistan 'have underperformed' says Agha after T20 World Cup exit

-

Under-strength Toulouse overpower Montauban in Top 14

Under-strength Toulouse overpower Montauban in Top 14

-

Vietnam AI law takes effect, first in Southeast Asia

-

Brazil's Lula visits flood zone as death toll from landslides hits 70

Brazil's Lula visits flood zone as death toll from landslides hits 70

-

New Zealand into T20 World Cup semis as Sri Lanka avoid big Pakistan loss

-

Medvedev wins Dubai title as Griekspoor withdraws

Medvedev wins Dubai title as Griekspoor withdraws

-

First Yamal hat-trick helps Liga leaders Barcelona beat Villarreal

-

Liverpool hit five past West Ham, Haaland-less City face Leeds test

Liverpool hit five past West Ham, Haaland-less City face Leeds test

-

Van der Poel romps to cobbled classic win

-

Republicans back Trump, Democrats attack 'illegal' Iran war

Republicans back Trump, Democrats attack 'illegal' Iran war

-

Madonna is surprise attraction at Dolce & Gabbana Milan show

-

Farhan keeps Pakistan hopes alive as they post 212-8 against Sri Lanka

Farhan keeps Pakistan hopes alive as they post 212-8 against Sri Lanka

-

Afghanistan says civilians killed in Pakistan air strikes

-

Tug of war: how US presidents battle Congress for military powers

Tug of war: how US presidents battle Congress for military powers

-

Residents flee as Iran missiles stun peaceful Gulf cities

-

Streets empty and shops close as US strikes confirm Iranian fears

Streets empty and shops close as US strikes confirm Iranian fears

-

Israelis shelter underground as Iran fires missiles

-

Bournemouth held by Sunderland in blow to European bid

Bournemouth held by Sunderland in blow to European bid

-

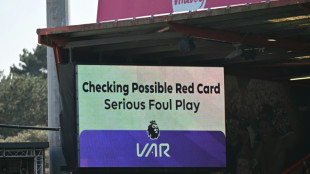

VAR expanded to include second bookings and corners for World Cup

-

Iranians in Istanbul jittery but jubilant at US, Israeli strikes

Iranians in Istanbul jittery but jubilant at US, Israeli strikes

-

Congo-Brazzaville president vows to keep power as campaign kicks off

-

US, Israel launch strikes on Iran, Tehran hits back across region

US, Israel launch strikes on Iran, Tehran hits back across region

-

Germany's Aicher wins women's super-G in Soldeu

-

Fight against terror: Trump threatens Tehran's mullahs

Fight against terror: Trump threatens Tehran's mullahs

-

US and Israel launch strikes on Iran, explosions reported across region

-

Iran's Khamenei: ruthless revolutionary at apex of Islamic republic

Iran's Khamenei: ruthless revolutionary at apex of Islamic republic

-

In Iran attack, Trump seeks what he foreswore -- regime change

-

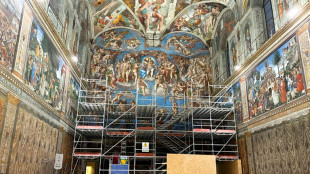

Climate change forces facelift for Michelangelo masterpiece

Climate change forces facelift for Michelangelo masterpiece

-

Trump says US aims to destroy Iran's military, topple government

-

Acosta wins season-opening MotoGP sprint after Marquez penalty

Acosta wins season-opening MotoGP sprint after Marquez penalty

-

US and Israel launch strikes against Iran

-

Afghanistan says Pakistan fighter jet down as cross-border strikes flare

Afghanistan says Pakistan fighter jet down as cross-border strikes flare

-

Kerr says only '85 percent' fit for Women's Asian Cup

-

Messi's Inter Miami to visit White House: US media

Messi's Inter Miami to visit White House: US media

-

Thunder beat Nuggets in overtime on Gilgeous-Alexander's return

Generative AI's most prominent skeptic doubles down

Two and a half years after ChatGPT rocked the world, scientist and writer Gary Marcus remains generative artificial intelligence's great skeptic, offering a counter-narrative to Silicon Valley's AI true believers.

Marcus became a prominent figure of the AI revolution in 2023, when he sat beside OpenAI chief Sam Altman at a Senate hearing in Washington as both men urged politicians to take the technology seriously and consider regulation.

Much has changed since then. Altman has abandoned his calls for caution, instead teaming up with Japan's SoftBank and funds in the Middle East to propel his company to sky-high valuations as he tries to make ChatGPT the next era-defining tech behemoth.

"Sam's not getting money anymore from the Silicon Valley establishment," and his seeking funding from abroad is a sign of "desperation," Marcus told AFP on the sidelines of the Web Summit in Vancouver, Canada.

Marcus's criticism centers on a fundamental belief: generative AI, the predictive technology that churns out seemingly human-level content, is simply too flawed to be transformative.

The large language models (LLMs) that power these capabilities are inherently broken, he argues, and will never deliver on Silicon Valley's grand promises.

"I'm skeptical of AI as it is currently practiced," he said. "I think AI could have tremendous value, but LLMs are not the way there. And I think the companies running it are not mostly the best people in the world."

His skepticism stands in stark contrast to the prevailing mood at the Web Summit, where most conversations among 15,000 attendees focused on generative AI's seemingly infinite promise.

Many believe humanity stands on the cusp of achieving superintelligence or artificial general intelligence (AGI), technology that could match and even surpass human capability.

That optimism has driven OpenAI's valuation to $300 billion, an unprecedented level for a startup, with billionaire Elon Musk's xAI racing to keep pace.

Yet for all the hype, the practical gains remain limited.

The technology excels mainly at coding assistance for programmers and text generation for office work. AI-created images, while often entertaining, serve primarily as memes or deepfakes, offering little obvious benefit to society or business.

Marcus, a longtime New York University professor, champions a fundamentally different approach to building AI -- one he believes might actually achieve human-level intelligence in ways that current generative AI never will.

"One consequence of going all-in on LLMs is that any alternative approach that might be better gets starved out," he explained.

This tunnel vision will "cause a delay in getting to AI that can help us beyond just coding -- a waste of resources."

- 'Right answers matter' -

Instead, Marcus advocates for neurosymbolic AI, an approach that attempts to rebuild human logic artificially rather than simply training computer models on vast datasets, as is done with ChatGPT and similar products like Google's Gemini or Anthropic's Claude.

He dismisses fears that generative AI will eliminate white-collar jobs, citing a simple reality: "There are too many white-collar jobs where getting the right answer actually matters."

This points to AI's most persistent problem: hallucinations, the technology's well-documented tendency to produce confident-sounding mistakes.

Even AI's strongest advocates acknowledge this flaw may be impossible to eliminate.

Marcus recalls a telling exchange from 2023 with LinkedIn founder Reid Hoffman, a Silicon Valley heavyweight: "He bet me any amount of money that hallucinations would go away in three months. I offered him $100,000 and he wouldn't take the bet."

Looking ahead, Marcus warns of a darker consequence once investors realize generative AI's limitations. Companies like OpenAI will inevitably monetize their most valuable asset: user data.

"The people who put in all this money will want their returns, and I think that's leading them toward surveillance," he said, pointing to Orwellian risks for society.

"They have all this private data, so they can sell that as a consolation prize."

Marcus acknowledges that generative AI will find useful applications in areas where occasional errors don't matter much.

"They're very useful for auto-complete on steroids: coding, brainstorming, and stuff like that," he said.

"But nobody's going to make much money off it because they're expensive to run, and everybody has the same product."

T.Ward--AMWN